An elastic and resilient key-value store

Content Addressable

Each piece of information is assigned a unique identifier for self-certification, allowing for optimized data placement, fault tolerance, performance, security and integrity.
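The idea of a self-certifying identifier can be sketched in a few lines. This is only an illustration: the source does not say how memo derives its identifiers, so the use of SHA-256 and the helper names `address_of` and `verify` are assumptions.

```python
import hashlib


def address_of(value: bytes) -> str:
    """Derive an identifier from the content itself (SHA-256 is an assumption)."""
    return hashlib.sha256(value).hexdigest()


def verify(address: str, value: bytes) -> bool:
    """Self-certification: anyone holding the address can check the data."""
    return address_of(value) == address


block = b"bar"
address = address_of(block)

# The same content always maps to the same address, and any
# modification of the content is detected by recomputing the hash.
intact = verify(address, block)
tampered = verify(address, b"baz")
```

Because the identifier depends only on the content, a reader does not need to trust the node that served the data: recomputing the hash is enough to detect corruption or tampering.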

Decentralized

Unlike the popular manager/worker (a.k.a. master/slave) model, memo relies on a decentralized architecture that does away with bottlenecks, single points of failure and cascading failures.

Policy Based

memo can be customized through policies such as redundancy, encryption, compression, versioning and deduplication to fit your application's needs.

Strongly Consistent

memo's consistency model can be configured, from eventual to strong for the most demanding applications, along with rebalancing delays and more.
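One classic way to think about the range from eventual to strong consistency is through read and write quorum sizes: with N replicas, reads that contact R replicas and writes that contact W replicas always overlap when R + W > N. The source does not describe memo's actual mechanism, so the sketch below is a generic illustration, and the function name `always_intersect` is hypothetical.

```python
from itertools import combinations


def always_intersect(n: int, r: int, w: int) -> bool:
    """Check by brute force that every read set of size r shares at
    least one replica with every write set of size w, out of n replicas."""
    replicas = range(n)
    return all(
        set(read_set) & set(write_set)
        for read_set in combinations(replicas, r)
        for write_set in combinations(replicas, w)
    )


# With 3 replicas, majority reads and writes always overlap, so a read
# contacts at least one replica that holds the latest write.
strong = always_intersect(3, 2, 2)

# Reading and writing a single replica may miss the latest write: the
# replicas only converge eventually.
weak = always_intersect(3, 1, 1)
```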

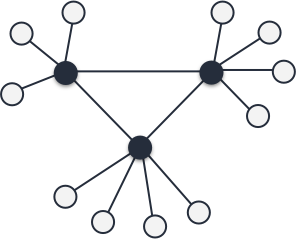

A modern approach to distribution

memo's decentralized architecture brings a number of advantages over manager/worker-based distributed systems.

Managers cannot easily scale

The manager/worker model was designed so that workers can be scaled easily. Managers are left out of the equation, which limits the scalability of the overall system.

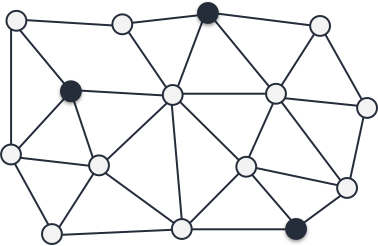

Scalable by nature

Instead of relying on authoritative manager nodes, every node acts as both manager and worker, collectively participating in maintaining consistency, routing messages, hosting replicas and more.
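To illustrate how nodes can route requests without asking a central manager, here is a minimal consistent-hashing sketch. It is not memo's routing algorithm, which the source does not describe; the `Ring` class and the node names are hypothetical.

```python
import bisect
import hashlib


def position(name: str) -> int:
    """Map a node name or a key to a position on the hash ring."""
    return int.from_bytes(hashlib.sha256(name.encode()).digest()[:8], "big")


class Ring:
    """A hash ring that any node can build locally from the member list."""

    def __init__(self, nodes):
        self._points = sorted((position(node), node) for node in nodes)
        self._positions = [pos for pos, _ in self._points]

    def owner(self, key: str) -> str:
        # The owner is the first node clockwise from the key's position.
        index = bisect.bisect_right(self._positions, position(key))
        return self._points[index % len(self._points)][1]


nodes = ["node-a", "node-b", "node-c", "node-d"]

# Two nodes that know the same member list compute the same owner,
# whatever order they learned the members in.
ring_on_a = Ring(nodes)
ring_on_d = Ring(list(reversed(nodes)))
same_owner = ring_on_a.owner("foo") == ring_on_d.owner("foo")
```

Every participant derives the same answer from the same membership information, so no node needs to hold special authority over where a key lives.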

Managers could be bottlenecks

The manager nodes may become overloaded with requests and fail, leading clients to redirect their requests to the remaining managers, which may in turn become overloaded and take down the whole system through a cascading effect.

Hard to overload

Since every node can process a client's request, it is far harder to overload the system, which removes bottlenecks, single points of failure and cascading effects.
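The cascading effect can be made concrete with a toy model: load is spread evenly over the nodes that accept requests, and whenever the per-node share exceeds a node's capacity, one node fails and its share is redistributed. The function name and all numbers below are illustrative assumptions, not measurements of memo.

```python
def surviving_nodes(node_count: int, capacity: float, total_load: float) -> int:
    """Return how many nodes remain once the cascade has settled."""
    alive = node_count
    while alive > 0 and total_load / alive > capacity:
        # One overloaded node fails; the others inherit its share.
        alive -= 1
    return alive


# Three managers handling 100 requests/s each, facing 310 requests/s:
# the first failure pushes the others over capacity, and all go down.
managers_left = surviving_nodes(3, 100, 310)

# The same load spread over 30 equal peers stays far below capacity.
peers_left = surviving_nodes(30, 100, 310)
```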

Managers have limited capacity

The manager nodes, being limited in number, have an intrinsically limited capacity to handle a large number of client requests in parallel.

Unlimited through total distribution

Thanks to the natural distribution of the decentralized model, the more nodes in the system, the faster requests can be processed, benefiting from the bandwidth and power of all the nodes.

Managers are ideal targets

Because manager nodes orchestrate the system but also handle access control, such nodes are critical and an ideal target for attackers.

No concentration of authority

Since no node is more powerful, knowledgeable or authoritative than the others, the system is far harder to attack.

Managers maintain consistency

On top of handling clients' specific requests, orchestrating the system etc., the manager nodes are also responsible for running an expensive consensus algorithm, hence limiting their ability to process many update requests in parallel.

Per-value quorums

Instead of relying on a single quorum of manager nodes for maintaining consistency, memo makes use of per-value quorums, allowing for better performance (increased parallelism), fault tolerance and scalability.
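A rough sketch of what per-value quorums can look like: each key deterministically selects its own small group of nodes, so updates to different keys are agreed upon by different groups and can proceed in parallel. The source does not specify how memo selects quorum members; rendezvous (highest-random-weight) hashing is used here purely as a stand-in, and `quorum_for`, `majority` and the node names are hypothetical.

```python
import hashlib


def score(node: str, key: str) -> int:
    """Pseudo-random weight of a node for a given key."""
    digest = hashlib.sha256(f"{node}:{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def quorum_for(key: str, nodes, replicas: int = 3):
    """Pick the nodes with the highest weight for this key."""
    return sorted(nodes, key=lambda node: score(node, key), reverse=True)[:replicas]


def majority(replicas: int) -> int:
    """Number of quorum members that must agree on an update."""
    return replicas // 2 + 1


nodes = [f"node-{i}" for i in range(10)]

# Each key gets its own quorum, computed locally from the member list.
quorums = {key: quorum_for(key, nodes) for key in (f"key-{i}" for i in range(100))}

# Over many keys, the consensus work is spread across the whole cluster
# rather than concentrated on a fixed set of managers.
distinct_quorums = {tuple(members) for members in quorums.values()}
participating = {node for members in quorums.values() for node in members}
```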

Example

The examples below illustrate how to use memo through its gRPC API from Go, C++ and Python.

Go

```go
package main

import (
	"fmt"
	"os"

	"golang.org/x/net/context"
	"google.golang.org/grpc"
	"google.golang.org/grpc/grpclog"

	kvs "memo/kvs"
)

[...]

// Replace "IP:PORT" with the address of a memo node.
connection, err := grpc.Dial("IP:PORT", grpc.WithInsecure())
if err != nil {
	grpclog.Fatalf("unable to connect: %v", err)
}
defer connection.Close()
store := kvs.NewKeyValueStoreClient(connection)
// Insert, update, fetch and finally delete the key "foo".
store.Insert(context.Background(),
	&kvs.InsertRequest{Key: "foo", Value: []byte("bar")})
store.Update(context.Background(),
	&kvs.UpdateRequest{Key: "foo", Value: []byte("baz")})
response, err := store.Fetch(context.Background(),
	&kvs.FetchRequest{Key: "foo"})
if err != nil {
	grpclog.Fatalf("unable to fetch: %v", err)
}
fmt.Printf("%s\n", string(response.Value))
store.Delete(context.Background(),
	&kvs.DeleteRequest{Key: "foo"})
```

C++

```cpp
#include <iostream>

#include <grpc++/grpc++.h>

#include <memo_kvs.grpc.pb.h>

[...]

// Replace "IP:PORT" with the address of a memo node.
auto channel = grpc::CreateChannel("IP:PORT", grpc::InsecureChannelCredentials());
// NewStub() returns a std::unique_ptr to the stub.
auto store = memo::kvs::KeyValueStore::NewStub(channel);
{
  // A grpc::ClientContext must not be reused across calls.
  grpc::ClientContext context;
  memo::kvs::InsertRequest request;
  memo::kvs::InsertResponse response;
  request.set_key("foo");
  request.set_value("bar");
  store->Insert(&context, request, &response);
}
{
  grpc::ClientContext context;
  memo::kvs::UpdateRequest request;
  memo::kvs::UpdateResponse response;
  request.set_key("foo");
  request.set_value("baz");
  store->Update(&context, request, &response);
}
{
  grpc::ClientContext context;
  memo::kvs::FetchRequest request;
  memo::kvs::FetchResponse response;
  request.set_key("foo");
  store->Fetch(&context, request, &response);
  std::cout << response.value() << std::endl;
}
{
  grpc::ClientContext context;
  memo::kvs::DeleteRequest request;
  memo::kvs::DeleteResponse response;
  request.set_key("foo");
  store->Delete(&context, request, &response);
}
```

Python

```python
#! /usr/bin/env python3

import grpc

import memo_kvs_pb2_grpc
import memo_kvs_pb2 as kvs

[...]

# Replace 'IP:PORT' with the address of a memo node.
channel = grpc.insecure_channel('IP:PORT')
store = memo_kvs_pb2_grpc.KeyValueStoreStub(channel)
# Insert, update, fetch and finally delete the key 'foo'.
store.Insert(kvs.InsertRequest(key = 'foo', value = 'bar'.encode('utf-8')))
store.Update(kvs.UpdateRequest(key = 'foo', value = 'baz'.encode('utf-8')))
response = store.Fetch(kvs.FetchRequest(key = 'foo'))
print(response.value.decode('utf-8'))
store.Delete(kvs.DeleteRequest(key = 'foo'))
```

Talk to us on the Internet!

Ask our team or our contributors questions, get involved in the project...